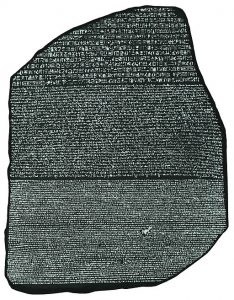

We are living in the era of Big Data, but the problem, of course, is that the bigger our data sets become, the slower even simple search operations get. I will now show you a trick that is the next best thing to magic: building a search function that practically doesn’t slow down even for large data sets… in base R!

Continue reading “Hash Me If You Can”

Month: January 2019

If wealth had anything to do with intelligence…

…the richest man on earth would have a fortune of no more than $43,000! If you don’t believe me, read this post!

Understanding the Magic of Neural Networks

Everything “neural” is (again) the latest craze in machine learning and artificial intelligence. But what is the magic of artificial neural networks (ANNs)?

Understanding the Maths of Computed Tomography (CT) scans

Noseman has a headache and, as an old-school hypochondriac, he goes to see his doctor. His doctor is quite worried and makes an appointment with a radiologist for Noseman to get a CT scan.

Check Machin-like Formulae with Arbitrary-Precision Arithmetic

Happy New Year to all of you! Let us start the year with something for your inner maths nerd 🙂
