I started blogging seriously in November last year, and it is time to share some experiences and highlights with you before the summer break… so read on!

Continue reading “Summer Break: A Look back… and ahead”

Month: July 2019

Learning Data Science: Understanding and Using k-means Clustering

A few months ago I published a quite popular post on Clustering the Bible… One well-known clustering algorithm is k-means. If you want to learn how k-means works and how to apply it in a real-world example, read on…

Continue reading “Learning Data Science: Understanding and Using k-means Clustering”

Reinforcement Learning: Life is a Maze

It can be argued that the most important decisions in life are some variant of an exploitation-exploration problem. Shall I stick with my current job or look for a new one? Shall I stay with my partner or seek a new love? Shall I continue reading the book or watch the movie instead? In all of those cases, the question is whether I should “exploit” the thing I have or “explore” new things. If you want to learn how to tackle this most basic trade-off, read on…

Continue reading “Reinforcement Learning: Life is a Maze”

Teach R to read handwritten Digits with just 4 Lines of Code

What is the best way for me to find out whether you are rich or poor, when the only thing I know is your address? Looking at your neighbourhood! That is the big idea behind the k-nearest neighbours (or KNN) algorithm, where k stands for the number of neighbours to look at. The idea couldn’t be any simpler, yet the results are often very impressive indeed – so read on…
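To make the neighbourhood idea concrete, here is a minimal sketch in base R. It is not the code from the post itself: the function name `knn_predict` and the toy "address" data are made up for illustration, and it assumes plain Euclidean distance with a simple majority vote.

```r
# Minimal k-nearest-neighbours sketch in base R.
# knn_predict and the toy data below are hypothetical, for illustration only.
knn_predict <- function(train_x, train_y, new_x, k = 3) {
  # Euclidean distance from the new point to every training point
  dists <- sqrt(rowSums(sweep(train_x, 2, new_x)^2))
  # Labels of the k closest training points
  nearest <- train_y[order(dists)[seq_len(k)]]
  # Majority vote among those neighbours
  names(which.max(table(nearest)))
}

# Toy "addresses": map coordinates of houses with a known label
train_x <- rbind(c(1, 1), c(1, 2), c(2, 1), c(8, 8), c(8, 9), c(9, 8))
train_y <- c("poor", "poor", "poor", "rich", "rich", "rich")

# Classify a new address by looking at its 3 nearest neighbours
prediction <- knn_predict(train_x, train_y, new_x = c(7.5, 8.5), k = 3)
print(prediction)
```

Here the three closest houses are all labelled "rich", so the new address gets that label too. With real data you would normally scale the features first and try several values of k.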

Continue reading “Teach R to read handwritten Digits with just 4 Lines of Code”

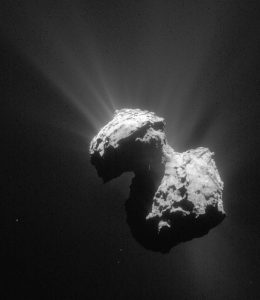

Creating a Movie with Data from Outer Space in R

The Rosetta mission of the European Space Agency (ESA) is one of the greatest (yet underappreciated) triumphs of humankind: it was launched in 2004 and, ten years later, landed the probe Philae on a small comet named 67P/Churyumov–Gerasimenko (for the whole timeline of the mission see here: Timeline of Rosetta spacecraft).

ESA provided the world with datasets of the comet, which we will use to create an animated GIF in R… so read on!

Continue reading “Creating a Movie with Data from Outer Space in R”