Over the last two and a half years, I have written more than one hundred posts for my blog “Learning Machines” on data science topics, i.e. statistics, artificial intelligence, machine learning, and deep learning.

I use many of those posts in my university classes. In this post, I will give you the first part of a learning path through the knowledge that has accumulated on this blog over the years, to help you become a well-rounded data scientist, so read on!

Continue reading “Learning Path for “Data Science with R” – Part I”

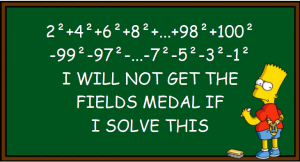

This time we want to solve the following simple task with R: Take the numbers 1 to 100, square them, and add all the even numbers while subtracting the odd ones!
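
To make the task concrete, here is one possible way to approach it in base R. This is only a minimal sketch, not necessarily the solution developed in the full post; the variable names `squares`, `signs`, and `result` are my own choices for illustration. Note that a square is even exactly when the original number is even, so it does not matter whether we test the numbers or their squares.

```r
# Square the numbers 1 to 100
squares <- (1:100)^2

# Even squares get a plus sign, odd squares a minus sign
signs <- ifelse(squares %% 2 == 0, 1, -1)

# Add the even squares and subtract the odd ones in one vectorized step
result <- sum(signs * squares)
result
# [1] 5050
```

The result can be checked by hand: each pair (2k)^2 - (2k - 1)^2 equals 4k - 1, and summing that for k from 1 to 50 gives 5050.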
