One of the biggest problems of the COVID-19 pandemic is that there are no reliable numbers of infections. This fact renders many model projections next to useless.

If you want to learn a simple method to roughly estimate the real number of infections and the expected deaths in the US, read on!

As we have seen many times on this blog: simple doesn’t always mean inferior, it only means more comprehensible! The following estimation is based on a simple idea from an article in DER SPIEGEL (H. Dambeck: Was uns die Zahl der Toten verrät).

The general idea goes like this:

- The number of people having died from COVID-19 is much more reliable than the number of infections.

- Our best estimate of the true fatality rate of COVID-19 still is 0.7% of the number of infected persons and

- we know that the time from reporting of an infection to death is about 10 days.
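To make these three assumptions concrete, here is a minimal one-day sketch using two figures from the US series used in this post: 433 deaths on day 42 and 1,291 confirmed infections on day 32. The variable names are chosen for illustration only.

```r
# Assumed parameters from the list above
ifr      <- 0.007  # assumed true fatality rate (0.7%)
lag_days <- 10     # assumed days from reported infection to death

# Two values taken from the US series used in this post
day_of_death    <- 42
deaths_on_day   <- 433                      # deaths reported on day 42
day_of_report   <- day_of_death - lag_days  # day 32
confirmed_cases <- 1291                     # confirmed infections on day 32

# Deaths on day 42 imply this many actual infections on day 32
implied_infections <- round(deaths_on_day / ifr)

# Share of actual infections that was officially confirmed on day 32
detected_share <- round(confirmed_cases / implied_infections, 4)

implied_infections  # 61857
detected_share      # 0.0209
```

In other words, under these assumptions only about 2% of the infections on that day were officially confirmed.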

With this knowledge, we can infer how many people actually got infected 10 days ago and deduce the ratio of confirmed to actually infected persons:

# https://en.wikipedia.org/wiki/Template:2019%E2%80%9320_coronavirus_pandemic_data/United_States_medical_cases

new_inf <- c(1, 1, 1, 2, 1, 1, 1, 3, 1, 0, 2, 0, 1, 4, 5, 18, 15, 28, 26, 64, 77, 101, 144, 148, 291, 269, 393, 565, 662, 676, 872, 1291, 2410, 3948, 5417, 6271, 8631, 10410, 9939, 12226, 17050, 19046, 20093, 19118, 20463, 25396, 26732, 28812, 32182, 34068, 25717, 29362)

deaths <- c(0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 4, 3, 2, 0, 3, 5, 2, 5, 5, 6, 4, 8, 7, 6, 14, 21, 26, 52, 55, 68, 110, 111, 162, 225, 253, 433, 447, 392, 554, 821, 940, 1075, 1186, 1352, 1175, 1214)

data <- data.frame(new_inf, deaths)

n <- length(new_inf)

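# shift a series forward by n days: drop the first n values, pad the end with NA

shift <- function(x, n = 10) {
  c(x[-(seq(n))], rep(NA, n))
}

# actual infections implied by the deaths reported 10 days later (fatality rate 0.7%)

data$real_inf <- shift(round(data$deaths / 0.007))

# share of actual infections that were officially confirmed

data$perc_real <- round(data$new_inf / data$real_inf, 4)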

data

## new_inf deaths real_inf perc_real

## 1 1 0 0 Inf

## 2 1 0 0 Inf

## 3 1 0 0 Inf

## 4 2 0 0 Inf

## 5 1 0 143 0.0070

## 6 1 0 143 0.0070

## 7 1 0 571 0.0018

## 8 3 0 429 0.0070

## 9 1 0 286 0.0035

## 10 0 0 0 NaN

## 11 2 0 429 0.0047

## 12 0 0 714 0.0000

## 13 1 0 286 0.0035

## 14 4 0 714 0.0056

## 15 5 1 714 0.0070

## 16 18 1 857 0.0210

## 17 15 4 571 0.0263

## 18 28 3 1143 0.0245

## 19 26 2 1000 0.0260

## 20 64 0 857 0.0747

## 21 77 3 2000 0.0385

## 22 101 5 3000 0.0337

## 23 144 2 3714 0.0388

## 24 148 5 7429 0.0199

## 25 291 5 7857 0.0370

## 26 269 6 9714 0.0277

## 27 393 4 15714 0.0250

## 28 565 8 15857 0.0356

## 29 662 7 23143 0.0286

## 30 676 6 32143 0.0210

## 31 872 14 36143 0.0241

## 32 1291 21 61857 0.0209

## 33 2410 26 63857 0.0377

## 34 3948 52 56000 0.0705

## 35 5417 55 79143 0.0684

## 36 6271 68 117286 0.0535

## 37 8631 110 134286 0.0643

## 38 10410 111 153571 0.0678

## 39 9939 162 169429 0.0587

## 40 12226 225 193143 0.0633

## 41 17050 253 167857 0.1016

## 42 19046 433 173429 0.1098

## 43 20093 447 NA NA

## 44 19118 392 NA NA

## 45 20463 554 NA NA

## 46 25396 821 NA NA

## 47 26732 940 NA NA

## 48 28812 1075 NA NA

## 49 32182 1186 NA NA

## 50 34068 1352 NA NA

## 51 25717 1175 NA NA

## 52 29362 1214 NA NA
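The `shift` helper simply moves a series forward in time by dropping its first `n` values and padding the end with `NA`. The small sketch below shows the effect on a toy vector. It also shows one possible way (not part of the original code, and with a made-up function name) to avoid the `Inf` and `NaN` entries in the first rows, which arise from dividing by an estimate of zero.

```r
# Same helper as in the post: drop the first n values, pad the end with NA
shift <- function(x, n = 10) {
  c(x[-(seq(n))], rep(NA, n))
}

toy <- c(10, 20, 30, 40, 50)
shifted <- shift(toy, n = 2)
shifted  # 30 40 50 NA NA

# Hypothetical variant of the ratio: return NA instead of Inf/NaN
# whenever the implied number of infections is zero
safe_ratio <- function(confirmed, implied, digits = 4) {
  ratio <- round(confirmed / implied, digits)
  ratio[!is.finite(ratio)] <- NA
  ratio
}

ratios <- safe_ratio(c(1, 0, 1291), c(0, 0, 61857))
ratios  # NA NA 0.0209
```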

We see that only up to 10% of actual infections are being officially registered (although fortunately this ratio is growing). Based on this percentage, we can extrapolate the number of actual infections from the number of confirmed infections and multiply it by the death rate to arrive at the number of projected deaths for the next 10 days, i.e. over the Easter weekend:

# how many are actually newly infected?

(real_inf <- round(tail(data$new_inf, 10) / mean(data$perc_real[(n-12):(n-10)])))

## [1] 219436 208788 223477 277350 291940 314656 351460 372057 280855 320663

# how many will die in the coming 10 days?

round(real_inf * 0.007)

## [1] 1536 1462 1564 1941 2044 2203 2460 2604 1966 2245

Unfortunately, the numbers do not bode well: this simple projection shows that, with over 300,000 new infections per day, there is a realistic possibility of breaking the 2,000 deaths-per-day barrier at Easter.

Remember: this is not based on some fancy model but only on the numbers of people that probably got infected already! This is why this method cannot project beyond the 10-day horizon, yet should be more accurate than many of the models being tossed around at the moment (which mostly rely on unreliable data).
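Because the whole projection rests on just two assumed parameters, it can be useful to wrap the steps in one function and vary them. The following is a minimal sketch, not the original code: the function name `project_deaths` and its arguments `ifr`, `lag`, and `window` are made up for illustration, and the defaults mirror the values used above.

```r
new_inf <- c(1, 1, 1, 2, 1, 1, 1, 3, 1, 0, 2, 0, 1, 4, 5, 18, 15, 28, 26, 64, 77, 101, 144, 148, 291, 269, 393, 565, 662, 676, 872, 1291, 2410, 3948, 5417, 6271, 8631, 10410, 9939, 12226, 17050, 19046, 20093, 19118, 20463, 25396, 26732, 28812, 32182, 34068, 25717, 29362)
deaths <- c(0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 4, 3, 2, 0, 3, 5, 2, 5, 5, 6, 4, 8, 7, 6, 14, 21, 26, 52, 55, 68, 110, 111, 162, 225, 253, 433, 447, 392, 554, 821, 940, 1075, 1186, 1352, 1175, 1214)

# Hypothetical wrapper around the steps of this post
#   ifr    : assumed true fatality rate
#   lag    : assumed days from reported infection to death
#   window : number of most recent ratios to average
project_deaths <- function(new_inf, deaths, ifr = 0.007, lag = 10, window = 3) {
  n <- length(new_inf)
  stopifnot(length(deaths) == n, lag >= 1, window >= 1, n > lag + window)

  # actual infections implied by the deaths reported `lag` days later
  implied <- c(round(deaths / ifr)[-seq_len(lag)], rep(NA, lag))

  # share of actual infections that were confirmed, per day
  ratio <- round(new_inf / implied, 4)

  # average the last `window` days for which a ratio is available
  idx <- (n - lag - window + 1):(n - lag)
  detection <- mean(ratio[idx])

  # extrapolate actual infections for the last `lag` days and project deaths
  est_infections <- round(tail(new_inf, lag) / detection)
  list(detection        = detection,
       est_infections   = est_infections,
       projected_deaths = round(est_infections * ifr))
}

res <- project_deaths(new_inf, deaths)
res$est_infections[1]    # 219436
res$projected_deaths[1]  # 1536
```

With the defaults this follows the same steps as the code above; passing other values for `ifr` or `lag` gives a quick impression of how sensitive the projection is to these two assumptions.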

We will soon see how all of this pans out… please share your thoughts and your own calculations in the comments below.

I wish you, despite the grim circumstances, a Happy Easter!

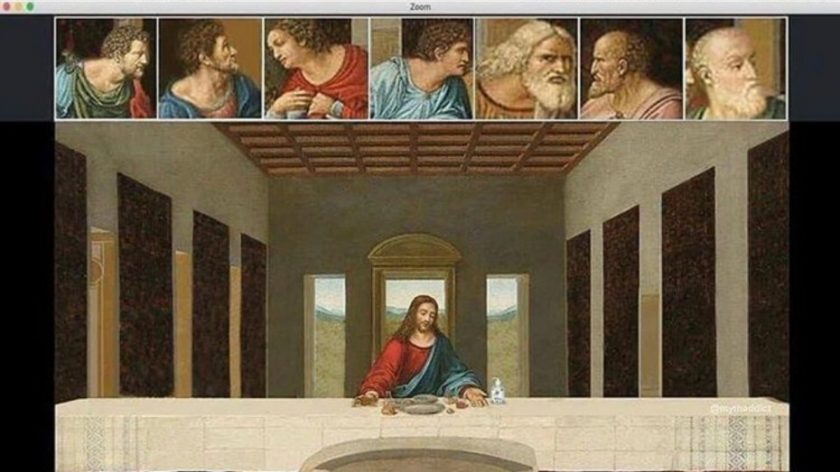

…and heed what Jesus would do in times of social distancing!

UPDATE April 14, 2020

Unfortunately, my prediction of breaking the 2,000 deaths-per-day barrier came true.

Thanks for the post.

Sometimes I say to myself that I prefer being imperfect to flying blind. Simple math can be a tool for public health decision-making on the ground (to get a sense of reality). I did some simple math a few weeks ago (inspired by a post from Tomas Pueyo):

Take deaths as an input to hypothetically calculate the number of true cases. As we know, it takes approximately (on average) 17.3 days (source: https://midasnetwork.us/covid-19/) for a person to go from catching the virus to dying. That means a person who died on 18 March 2020 in country “X” probably got infected around 01 March 2020. For this scenario, let’s use a case fatality rate of 1% (although it’s higher: WHO estimated 3.4% as of 03 March 2020). That means that, around 01 March 2020, there were already around ~100 cases in the country (of which only one ended up in death 17.3 days later).

Now, use the average doubling time for the coronavirus (the time it takes for cases to double, on average). It’s 6.4 days (the epidemic doubling time was 6·4 days with 95% CI 5·8–7·1 as presented in https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(20)30260-9/fulltext). That means that, in the 17 days it took this person to die, the cases had to multiply by ~6 (= 2^(17/6.4)). So, if you are not diagnosing all cases, one death today means roughly 600 true cases today; we really don’t know the number of true cases. However, we can expect cases to double about every six days.

What’s your thought on this? Anyway, I am learning so much from you. Keep it up.

Regards,

kibria
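The arithmetic in the comment above can be written out in a few lines of R. This is only a sketch of the commenter's back-of-the-envelope reasoning, using the rounded 17 days and the 1% fatality rate from the comment; the variable names are illustrative.

```r
days_to_death <- 17    # rounded from the 17.3 days quoted in the comment
cfr           <- 0.01  # fatality rate assumed in the comment (1%)
doubling_time <- 6.4   # epidemic doubling time in days, as quoted

# One death implies about 1 / cfr infections at the time of infection
cases_at_infection <- 1 / cfr

# Growth factor over the time it took that person to die
growth_factor <- 2^(days_to_death / doubling_time)

# Implied true cases today per death observed today
cases_today <- cases_at_infection * growth_factor

round(growth_factor, 1)  # 6.3
round(cases_today)       # 630, i.e. roughly the 600 quoted in the comment
```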

Interesting, taking the mean of the ratio of the last three pairs seems to corroborate this conclusion:

Yeah… that’s a good catch. As we move on, we can further evaluate this calculation.

By the way, my colleague and I have developed a COVID surveillance dashboard (http://www.globalhealthanalytics.info/) and we are still working to improve the system – more analytics and simple modelling features are on the way.

Thanks,

kibria

Thank you for sharing this… I will check back from time to time but if there are major enhancements please share this with us too here – Keep up the good work!

…just had another idea: did you do it with Shiny? If yes, why not publish a guest post here explaining how you did it? Would be interesting for many readers of this blog!

Dear Holger, this was a volunteer-based public effort: Nibras Ar Rakib, a brilliant young developer, worked with me on this COVID dashboard (http://www.globalhealthanalytics.info/). We used the JHU dataset. I did all the analyses in R first to see how things looked, which later helped me develop a design concept for the site. Then Nibras used a different technology stack (mainly Python and JavaScript) to make it a reality. Not sure whether this experience qualifies for a guest post!

Thanks for your interest!

Regards,

kibria

We can be reached at the below links:

https://www.linkedin.com/in/golamkibriamadhurza/

https://www.linkedin.com/in/nibras-ar-rakib-8012b260/

“The mean time from illness onset to death—adopted from a previous study [14]—was estimated at 20.2 days (95% CI: 15.1, 29.5)”

http://www.mdpi.com/2077-0383/9/2/523/htm

… which still does not include the time from infection to onset of illness (incubation time: ~5 days in most cases)….

Very attentive: you are correct, it should be “the time from reporting of an infection to death is about 10 days”, which is the relevant number. I corrected it in the text.

Thank you!

This is simply poor abstraction and simplification. The death rate is NOT 0.7% overall: it is taken from a specific set of example countries, but different countries have vastly different death rates, depending in part on how early and how aggressive their intervention strategies were. Look at Germany and at Taiwan and even at Japan. Then look at Italy and Spain and the USA. You simply cannot roll out a single death rate parameter and then shoot from the hip and estimate actual cases everywhere. No way.

After having scanned many relevant papers on the topic I still think that 0.7 is the single best number for the true overall fatality rate you can come up with. I understand that it depends on age, but why should it depend on countries per se?

The only reason I see is that different healthcare systems sooner or later get overwhelmed, but aside from that viruses don’t discriminate between different nationalities. (The different approaches countries pursue only change the velocity of new infections, not the fatality rate of infected persons.) In my opinion the hugely different fatality rates mainly depend on the rate of undetected infections.
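To illustrate this last point with numbers: if the true fatality rate really were the same everywhere, the share of infections that gets detected would follow directly from the observed case fatality rate. The sketch below assumes the 0.7% from the post and uses two made-up observed rates for two hypothetical countries. It ignores reporting delays and differences in age structure, so it is a rough illustration rather than an estimate.

```r
ifr <- 0.007  # assumed true fatality rate among all infected persons

# Hypothetical observed case fatality rates (deaths / confirmed cases)
observed_cfr <- c(country_a = 0.02, country_b = 0.10)

# If deaths are counted completely, deaths = ifr * infections = cfr * confirmed,
# so the detected share (confirmed / infections) equals ifr / cfr
detected_share <- ifr / observed_cfr

round(detected_share, 2)  # country_a 0.35, country_b 0.07
```

Under these assumptions, a fivefold difference in observed fatality rates corresponds to a fivefold difference in the share of detected infections.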

So, I still think that the model does make sense… but we will soon see how it pans out (I of course hope that there will be considerably fewer deaths!).

UPDATE April 14, 2020

Unfortunately, my prediction of breaking the 2,000 deaths-per-day barrier came true.

Some thoughts:

At some point enough people will be infected (1/2 the population) that many conclusions will either be wrong or unverifiable–for example, when half a population is infected one won’t be able to distinguish between the effects of social distancing or the reduction of the number of possible infections remaining.

Also, since this is a diffusion problem, meaning that the spread of the virus happens in physical space, the boundaries around points of infection will affect the rate of spread.

Lastly, we will not know for some time whether the death rate was affected by the level of emergency care–so some deaths might not be attributable to the lethality of the virus.

Yes, I agree, there are many unknowns and systematic problems. What confuses me is that there seems to be such a steep learning curve, also for professional epidemiologists. I would have thought that there were more emergency protocols at the ready.

As I wrote, the biggest problem everywhere is data quality: infection numbers seem to be little more than accidental and the idea of representative testing by random sampling seems to have emerged only recently! If not, they at least did a lousy job of communicating what has to be done to lift data quality.

The whole world now (literally!) relies on those numbers and as we all know: garbage in – garbage out, this is also (and especially) true for data science! We are all together in this blind flight with an uncertain ending.

Thanks again, Holger!

Your entry again inspired me to see what our situation is in Spain (Madrid in particular)…

https://www.linkedin.com/feed/update/urn:li:activity:6654425713229254656/

Thank you, Carlos, highly appreciated!

The infection fatality rate is derived from the data collected and analyzed from Wuhan. For Germany, 1.3% was derived. They also assumed 14 days from infection to death. Maybe you want to update those numbers for your model. (reference: http://www.uni-goettingen.de/de/document/download/ff656163edb6e674fdbf1642416a3fa1.pdf/Bommer%20&%20Vollmer%20(2020)%20COVID-19%20detection%20April%202nd.pdf)

Thank you, Sebastian: Yes, I know this publication. In fact there are now a lot of different studies with often wildly varying parameters. I still think that 0.7 is the single best estimate for the fatality rate.

Concerning the number of days: we need the period from the showing of symptoms (this is when they get registered) till death. With an incubation period of 4 to 5 days this makes about 10 days.

Hi LM,

This is a simple and effective model: as stated, a back-of-the-envelope calculation!

I just finished working on a replication of your model for the United Kingdom COVID data set, with a couple of differences:

1. The UK data set has two sets of death counts (definition below):

a. Number of deaths occurring in hospitals in the UK among patients who have tested positive for coronavirus, based on reports sent to the UK government by hospitals.

b. Number of deaths in England and Wales by date of occurrence where COVID-19 was mentioned on the death certificate, including suspected cases of COVID-19, according to the Office for National Statistics.

2. I increased the true fatality rate to 0.9% as that is the best estimate for the UK currently, according to CEBM at Oxford (https://www.cebm.net/covid-19/global-covid-19-case-fatality-rates/)

I’d like to tweet some of what I produced and credit your blog if that’s ok with you – do you have a Twitter handle I can also credit?

Thanks,

@QaribbeanQuant

That is great, Justin! It is of course absolutely ok with me, I appreciate it. My Twitter handle is @ephorie (although I am not very active there…) – Looking forward to your tweets!

Dear Dr. Holger,

I ran the code today (data as of 19 April) for the US and Spain to publish the results in the dashboard; it seems our death prediction either overestimates or underestimates to a great extent. FYI, with 18 April data (data source: JHU), the actual CFRs for the US and Spain are 5.3% and 10.5% respectively (http://www.globalhealthanalytics.info/). Perhaps we can’t apply this value to the “actually newly infected” parameter to predict the deaths either. Any thoughts on how to come up with a better prediction (a more up-to-date infection rate multiplier, although it’s sort of a back-of-the-envelope calculation)?

Thanks,

Kibria

It might be that the quality of even the deaths data is so bad that the error margin of any prediction is just too large. At the end of the day it’s “garbage in garbage out”, no matter how good your model is. When you e.g. look at the official predictions in the US you will see large swings too.

This is the reason why e.g. in Austria and Germany there are now big projects under way to roll out representative tests to get some idea of how many people are really infected.

Yeah! I agree there are historical data quality issues around COVID. In the case of the US, the 95% CI for the predicted period is 2015.06 – 2261.74, yet the true value lies outside those bounds (1,561 new deaths reported on 19 April).