Google does it! Facebook does it! Amazon does it for sure!

Especially in web design and online advertising, everybody is talking about A/B testing. If you want to quickly understand what it is and how you can do it with R, read on!

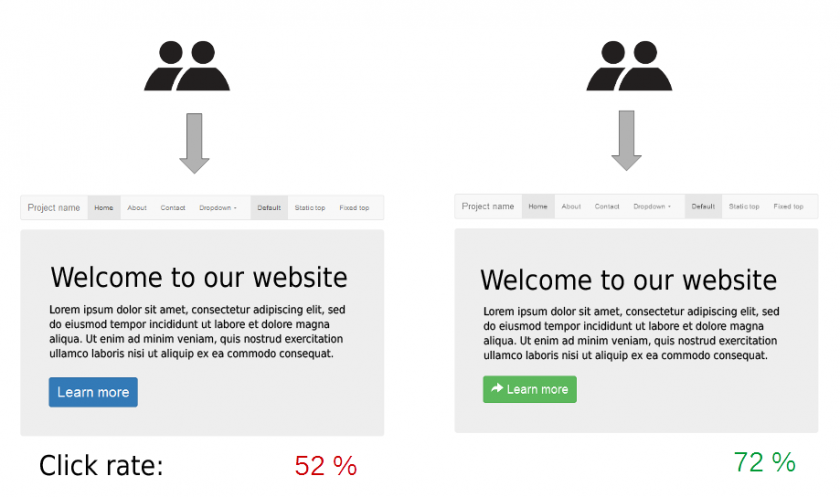

The basic idea of A/B testing is to systematically (and normally automatically) test two different alternatives, e.g. two different web designs, and decide which one does better, e.g. in terms of conversion rate (i.e. the share of visitors who click on a button or buy a product).

The bad news is that you have to understand a little bit about statistical hypothesis testing. The good news is that the following post covers everything you need, and as an added bonus R already has all the necessary tools at hand: From Coin Tosses to p-Hacking: Make Statistics Significant Again! (OK, reading it would take you over one minute…).

To give you a practical example, we will use a dataset from DataCamp’s course “A/B Testing in R” (experiment_data.csv). Each row records which group a visitor belonged to (condition: control or test) and whether that visitor clicked on the respective offer or not (clicked_adopt_today: 1 or 0):

experiment <- read.csv("data/experiment_data.csv")

experiment <- experiment[ , 2:3]

head(experiment, 10)

## condition clicked_adopt_today

## 1 control 0

## 2 control 1

## 3 control 0

## 4 control 0

## 5 test 0

## 6 test 0

## 7 test 1

## 8 test 0

## 9 test 0

## 10 test 1
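If you do not have the CSV file at hand, you can still follow along. The sketch below rebuilds a data frame with the same cell counts as the contingency table shown further down in this post. The name `experiment_sim` is made up for illustration, and the row order will differ from the original file, which does not matter for the test.

```r
# Cell counts taken from the contingency table in this post
# (order: control/0, control/1, test/0, test/1)
counts <- c(245, 49, 181, 113)

# Hypothetical stand-in for the original data; row order is NOT the original one
experiment_sim <- data.frame(
  condition = rep(c("control", "control", "test", "test"), times = counts),
  clicked_adopt_today = rep(c(0, 1, 0, 1), times = counts)
)

# Cross-tabulate to check that the counts match
table(experiment_sim)
```

Using `experiment_sim` in place of `experiment` should reproduce the tables and the test result of the following steps.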

Let us create two tables, one with the absolute counts and one with the relative proportions per group:

prop <- table(experiment)

prop_abs <- addmargins(prop)

prop_abs

## clicked_adopt_today

## condition 0 1 Sum

## control 245 49 294

## test 181 113 294

## Sum 426 162 588

prop_rel <- prop.table(prop, 1)

prop_rel <- round(addmargins(prop_rel, 2), 2)

prop_rel

## clicked_adopt_today

## condition 0 1 Sum

## control 0.83 0.17 1.00

## test 0.62 0.38 1.00
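To put the two click rates into perspective, it can help to look at the uplift as well. This is a small sketch that uses only the counts from the table above; the variable names (`clicks`, `visitors`, `uplift_abs`, `uplift_rel`) are chosen here for illustration.

```r
# Clicks and group sizes, read off the table with absolute counts
clicks   <- c(control = 49, test = 113)
visitors <- c(control = 294, test = 294)

# Conversion rate per group: share of visitors who clicked
conv_rate <- clicks / visitors

# Absolute uplift (difference in rates) and relative uplift (ratio minus one)
uplift_abs <- unname(conv_rate["test"] - conv_rate["control"])
uplift_rel <- unname(conv_rate["test"] / conv_rate["control"] - 1)

round(c(conv_rate, uplift_abs = uplift_abs, uplift_rel = uplift_rel), 3)
```

The absolute uplift is the difference in percentage points, while the relative uplift tells you by what fraction the test design improved on the control.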

Now for the actual test: conveniently enough, R has the prop.test function, which tests whether two proportions are significantly different (by performing Pearson’s chi-squared test under the hood). We only have to put our original table of counts into the function and R does the rest for us:
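To see the connection to Pearson’s chi-squared test for yourself, you can run both functions on the same 2×2 table and compare the results. The sketch below types in the counts from this post as a matrix (so it runs without the CSV); the names `res_prop` and `res_chisq` are just illustrative.

```r
# 2x2 table of counts from this post (rows: condition, columns: clicked 0/1)
prop <- matrix(
  c(245, 181, 49, 113),
  nrow = 2,
  dimnames = list(condition = c("control", "test"),
                  clicked_adopt_today = c("0", "1"))
)

# Both tests apply a continuity correction by default for a 2x2 table
res_prop  <- prop.test(prop)
res_chisq <- chisq.test(prop)

# Compare test statistics and p-values side by side
rbind(
  statistic = c(prop.test = unname(res_prop$statistic),
                chisq.test = unname(res_chisq$statistic)),
  p.value   = c(prop.test = res_prop$p.value,
                chisq.test = res_chisq$p.value)
)
```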

prop.test(prop)

## 2-sample test for equality of proportions with continuity correction

## data: prop

## X-squared = 33.817, df = 1, p-value = 6.055e-09

## alternative hypothesis: two.sided

## 95 percent confidence interval:

## 0.1442390 0.2911352

## sample estimates:

## prop 1 prop 2

## 0.8333333 0.6156463

Voilà, that was it already! Because the p-value is far below the common threshold of 0.05, the difference is statistically significant, so we can reject the null hypothesis that both groups have the same underlying click rate (in other words, that the observed difference is just due to chance)!
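One detail in the output is easy to misread: when given a two-column table, prop.test treats the first column as the “successes”. Here that is the column for 0, so the sample estimates (0.83 and 0.62) are the shares of visitors who did not click. The p-value is unaffected by this, but if you prefer to see the click rates directly, you can pass the click counts and group sizes explicitly. A minimal sketch with the counts from this post (the names `clicks`, `visitors` and `res` are chosen for illustration):

```r
# Clicks and group sizes from the table of absolute counts
clicks   <- c(control = 49, test = 113)
visitors <- c(control = 294, test = 294)

# Same test, now with "clicked" counted as success
res <- prop.test(x = clicks, n = visitors)

res$estimate  # click rates of control and test group
res$conf.int  # confidence interval for control minus test
res$p.value   # same p-value as before
```

The confidence interval now refers to the click rate of the control group minus that of the test group, so it lies entirely below zero: the test design has the higher click rate.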

As a consequence, we would go for the design that was presented to the test group in the future: it achieved a click rate of 38% compared to 17% in the control group.
